A slow but steady revolution is occurring in the world of learning. If you have a child between the ages of 5 and 18 living at home, you’re probably seeing it unfold every day. Want to confirm you got your math problem correct? Just ask Siri. Need to understand how weather balloons work for a science project? Check out The Weather Channel Kids Web site. Forgot your homework assignment? Ask a friend to snap it and send it on Instagram.

The future of learning is here and it’s digital, social, continuous, and highly immersive. For companies, traditional training methods, such as classrooms, are still relevant, but they are no longer the prime delivery method for learning. They are slow to set up, are expensive, and consume too many productive hours. Many companies are beginning to view the classroom as a strategy for customized educational needs, such as corporate strategy or branding.

Static online-learning tools, such as asynchronous simulations and narrated slide decks, are not engaging enough to be effective as a replacement for live training, however. Meanwhile, many employees are unable to keep up with technological advances that affect their everyday work processes. Because knowledge becomes obsolete so quickly, people need continuous, always-on learning.

CGI, a global IT consulting company with 68,000 employees, was struggling with this very problem. Classroom training for consultants couldn’t keep up with the education required to service clients with sophisticated technology needs. CGI adopted a cloud-based learning platform to bridge the gap. The system, which can be personalized to the learner, includes video-based courses and online-learning rooms to foster social learning opportunities with other students and instructors. CGI is now training 50% more consultants, and learners are consuming 50% more training content than in the past.

The move to continuous, on-demand learning is also saving CGI money and enabling it to onboard new consultants faster. “It is a ‘moment of need’ reference tool that helps our employees in their day-to-day tasks,” says Bernd Knobel, a director at CGI.

Workforce and economic drivers for learning transformation

Learning needs are growing across all disciplines of content due to the speed of globalization, competition, and new disruptive business practices. During the fallout from the 2008 global recession, companies scaled back on organizational development, but that’s beginning to change as companies struggle to rebuild their businesses, says Josef Bastian, a senior learning performance consultant with Alteris Group.

The same forces that drove CGI to abandon the classroom are being felt across industries. The main drivers for change include:

1. Creating competitive advantage

Uber, Netflix, Amazon, Airbnb, Bloom Energy, and health insurer Oscar are among the companies considered highly disruptive in their markets today. They achieved innovation and market share by looking ahead and taking advantage of new technologies faster than competitors or in novel ways. Digital learning enables companies to stay ahead of the curve. Companies need to understand the new technologies before they are even available, so that they can understand the impact on the business and even invent new business models.

2. Closing the skills gap

We are now in an era that will rival the Industrial Age in terms of transformation. For example, a financial analyst today needs to know how to work with Big Data, including how to ask the right questions and how to use the related information systems. Jim Carroll, a speaker, consultant, and author on business transformation, uses the automotive industry as one rubric for change. “You’ve got folks who are struggling with all this new high-tech gear inside the car or the dashboard,” says Carroll. “And you look at a typical auto dealer or the person manufacturing a car, and the knowledge they need to do their job today is infinitely more complex than it was even 5 or 10 years ago.”

3. Retaining and motivating a new workforce

By 2025, Millennials will make up 75% of the workforce, according to the Brookings Institution. Various studies have shown that Millennials crave learning and collaboration and will do whatever it takes to get the information they need expediently. “I’ve got two sons who are 20 and 22 and they seem to learn in an entirely new and different way,” Carroll says. “To borrow from Pink Floyd, it is short, sharp shocks of knowledge ingested. They won’t sit down and read 50 pages of a textbook.” Sophisticated learning programs are one way to keep this generation engaged. “Millennials will be an increasing challenge for companies to attract and retain because of their high expectations,” says Bastian. “They’re not interested just in money but also in a career path and the opportunity for diverse experiences.”

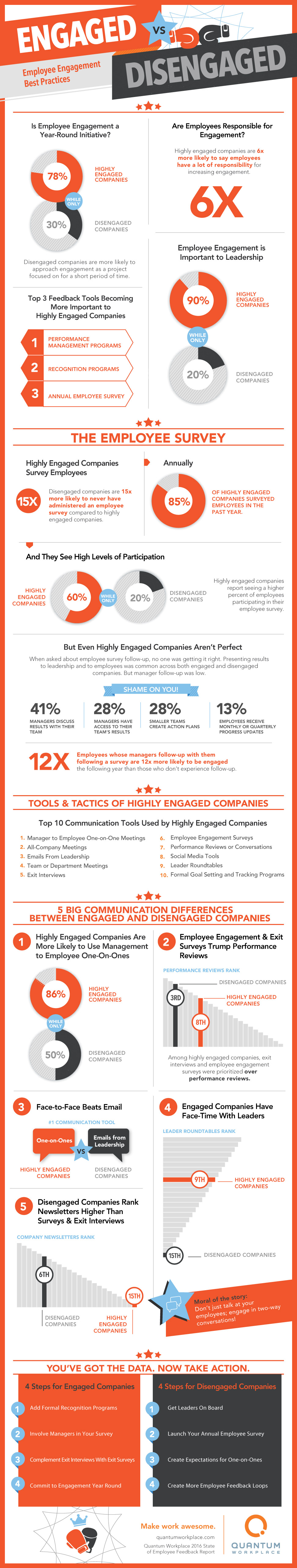

It’s risky to assume that your business isn’t in a prime spot for disruption (see “Corporate Learning Trends”). Companies will need to adapt or suffer the consequence of a disengaged and unprepared workforce. An Oxford Economics Workforce 2020 survey found that the top concern of employees is the risk of becoming obsolete; nearly 40% of North American respondents said that their current skills will not be adequate in three years, and only 41% of global respondents said that their companies are giving them opportunities to develop new skills.

Corporate Learning Trends

- Nearly 40% of North American respondents said that their current job skills will not be adequate in three years, with the majority agreeing that the need for technology skills, especially in analytics and programming, will grow.

- Less than half (47%) of executives say they have a culture of continuous learning. A similar percentage says that trouble finding employees with base-level skills is affecting their workforce strategy.

- Spending on technology education in the Americas will have a compound annual growth rate (CAGR) of 4.2% from 2014 to 2019, with the highest growth in the United States for collaborative applications (11.9% CAGR), followed by data management applications (7.8%).

- The global e-learning market was worth US$24 billion in 2013, with predicted growth to $31.6 billion by 2018.

- Of the $31.6 billion predicted worldwide spend on corporate e-learning by 2018, $22.5 billion will be on content.

- A majority of chief learning officers (57%) say that learning technology is a significant priority for spending.

- In 2014, 32.6% of training was delivered through e-learning (asynchronous and synchronous); 30.4% took place in the classroom, 18.9% was on the job, and 18.1% was “other,” which includes video and text.

- E-learning is the preferred method for developing IT skills, said 34% of participants, compared with 29.2% for classroom training. For developing business skills, an overwhelming 57.3% chose classroom training.

Evolution of learning: personal, social, mobile, and continuous

Online courses have become a standard way to gain knowledge, and that’s shifting to even more interactive learning through mobile, which is available anywhere and anytime. Like many large companies, SAP had created a vast library over time of more than 50,000 training assets, which was cumbersome to navigate and manage. The curriculum was organized across regions, lines of business, and disciplines. As a result, mapping learning to broader business goals was difficult.

To modernize its learning environment, SAP deployed a cloud-based learning management system and a social collaboration tool. Today, more than 74,000 employees can create personalized training through a combination of online self-study that incorporates video and documentation, social learning tools for exchanging ideas with other employees, and hands-on practice using SAP applications in a sandbox environment.

Now the company is engaging four times more employees in learning activities than it did with the older on-premise learning management system (LMS). The new approach is also creating between €35 million and €45 million in increased operating profit with just a 1% increase in engagement. Administrative costs have decreased by €600 per new content item added. Managers and employees alike can create and access learning paths much more easily and track progress from their personal pages. This integrated, simple-to-use online-learning approach is an example of how learning departments need to evolve to stay relevant.

There are several characteristics of digital learning transformation:

- Micro-learning. The concept of breaking lessons into smaller bites minimizes productivity disruptions and mirrors consumer behavior of watching three-minute videos and reading social media to get information on anything under the sun. Micro-learning is perfect for learning how to write a business plan, develop code in Ruby on Rails, or learn about a manufacturer’s latest appliance before a service call, for example. It can mean segmenting a longer course into small lessons, which the employee could view over lunch or in the evening from home. Several Alteris clients are now looking to deploy mobile learning apps, ideal for micro-learning, as the main delivery platform, says Bastian. These apps work best when integrated with the LMS and HR systems and push relevant material to users based on their learning profile.

- Self-serve learning. Just-in-time learning is critical when learning needs accelerate. Companies can help by providing continually updated tools and content that can be accessed from any device, at the moment of need. It’s the best way for learning departments to keep up with employees’ needs; you can schedule only so many Webinars and classroom training courses.

- Learning as entertainment. Gamification has been hot in marketing for a few years and is also a viable tool for corporate learning. New employees at Canadian telecommunications company TELUS earn badges as they complete different orientation tasks, such as creating a profile on the corporate social network. Leaders can spend eight weeks coaching a virtual Olympic speed-skating team, competing against colleagues to earn gold medals. Winning requires demonstrating the leadership behaviors that TELUS values. Training is also starting to incorporate virtual reality. For example, the U.S. military is using a gaming platform that incorporates avatars to create simulations that train soldiers to deal with dangerous or problematic situations. “This is more immersive and has the potential to help with the human connection failings of online learning,” says Joe Carella, managing director of executive education at the University of Arizona. Regardless of the method, adding an element of fun and recognition for reaching milestones is important for capturing the attention of younger workers who have grown up on games and apps.

- Social learning. Learning is an emotional experience and most people don’t want to be alone when they learn. In that regard, social media models can be profoundly valuable because they foster sharing and collaboration, which helps employees retain the knowledge they gain through formal training programs. That’s why social collaboration platforms have become as important to the overall learning strategy as the specific types of training delivery methods themselves.

- User-generated content. A common theme spanning all of the previously mentioned areas has played out in mainstream media and social media over the past few years. “What learners value the most today is the raw, user-created content over the highly polished corporate-created content,” says Elliott Masie, founder of The MASIE Center, a think tank focused on learning and knowledge in the workforce. “What’s really fascinating is that this trend is creating a town-square model where learners are ripe to learn from others.”

- Video. “Almost anyone can produce a training video, and it’s technically more convenient than ever before,” says Cushing Anderson, a VP and analyst focusing on HR and learning at IDC. “Digital learning is often about substituting convenience for perfect quality.”

Universities and MOOCs: What We’ve Learned So Far

Degrees and certifications have been going online through massive open online courses (MOOCs) for a few years, reflecting the changing needs of students as well as the escalating costs of traditional education.

Threatened with disruption from independent MOOC startups such as Coursera and Udacity, universities and colleges have scrambled to keep pace. More than 80% now offer several courses online and more than half offer a significant number of courses online, according to the EDUCAUSE Center for Analysis and Research. The survey found that more than two-thirds of academic leaders believe that online learning is critical to the long-term strategic mission of their institutions.

MOOCs have delivered a transformation of higher learning that wasn’t possible a decade ago, when access to a Harvard professor was available only to the elite few who had earned their place in those hallowed halls and who could afford the stratospheric tuition.

However, MOOCs have not been proven out yet as an effective replacement for traditional degrees, much less the acquisition of knowledge. Completion rates for courses are low, and MOOCs so far seem best suited for technical or tactical topics or as a supplement to the classroom, observes Joe Carella, managing director of executive education at the University of Arizona.

Yet MOOCs are playing a growing role in companies. Getting access to real business experts, such as a well-known speaker like Jim Collins, is especially valuable for a small or midsize business that couldn’t afford to hire that individual otherwise.

Making the shift

For decades, corporate learning departments have delivered education through a fairly narrow, top-down funnel: curriculum is designed months ahead of time and learning paths are structured for targeted roles in the organization. In moving toward accelerated, continuous learning, chief learning officers will need to help foster a culture of accountability and excitement around learning, as follows:

- Develop a close alignment between learning departments and senior business leaders to understand skill gaps, customer needs, and employee shortfalls.

- Become a content curator and take on a customer service role in the business.

- Ensure that learning is specific to the individual and relates to specific business and career goals.

- Have managers help by motivating and guiding employees through the tools, helping them develop personalized plans, and monitoring their progress.

In most cases, companies should be relatively hands-off when it comes to employee learning, says Eilif Trondsen, director of learning, innovation, and virtual technologies at Strategic Business Insights. “It is the responsibility of the workers to learn and acquire the needed skills and competencies for their jobs,” says Trondsen, “and it’s important to monitor the outcomes and not micromanage the process they use for getting there.”

However, it’s important that leaders motivate employees to learn by setting a good example. At TELUS, a company vice president started an internal online community and his own blog to share information about working in his division. The company views corporate learning not as curriculum but as a set of experiences, including classroom courses, online training, coaching, mentoring, and informal collaboration. TELUS measures the direct impact of learning through surveys of both employees and their managers. One metric reports on the learning tools that are most effective for acquiring different types of knowledge, while another measures return on performance from a specific learning program.

Learning effectiveness is a difficult key performance indicator to measure, just as customer engagement is, yet digital learning platforms often have built-in analytics that provide a starting point. The analytics allow companies to run reports on usage to see what’s most effective and to retire those assets that aren’t being used. Ultimately, companies should work toward connecting the dots between learning outcomes and business outcomes, such as attrition, employee engagement, and sales growth.

The human equation of digital learning

Today and into the future, no matter the technology or method deployed, excellent learning depends on excellent instructors. They must have credibility with their audiences or the program will flop. For example, when Sun Microsystems (now owned by Oracle) first offered e-learning on its programming language, Java, customers balked because they wanted to know who the expert behind the course was, just like in a classroom. So Sun included a video introduction by the original developer of Java, James Gosling, and the program took off.

Another caution with digital learning is that it cannot engage all five senses the way a physical setting does, and it lacks spontaneity. “With e-learning, you can pause the course whenever you wish, but sometimes breakthroughs happen when you are out of your comfort zone and challenged,” Carella says. An in-person discussion can veer in a novel direction in ways that don’t typically happen when people are chatting online. Ideally, online learning should be interspersed with in-person educational experiences, whether that’s attending a classroom training or meeting with a mentor.

Blending formal and informal training, as well as offline and online training, is a historical trend that will continue, says Masie, who also leads The Learning CONSORTIUM, a coalition of 230 global organizations, including CNN, Walmart, Starbucks, and American Express. Incorporating multiple modes of learning is critically important for gaining knowledge that sticks.

“A learner who isn’t motivated will sit in front of the screen and complete a course but may never actually develop the skill,” he says. To close the loop, managers and learning departments can develop a process that includes practice, feedback, and on-the-job experience.

The long-term goal of digital learning: grow the business

As executives consider how learning and training should evolve, a grounding consideration is the level of commitment. Few companies spend enough on it, says IDC’s Anderson. Those with world-class training programs can gain an edge in hiring and possibly even in the market. Introducing innovative learning tools and programs that allow employees to study independently and experiment with new ideas is also motivating, which can lead to higher engagement, productivity gains, and even bottom-line benefits. In fact, says Masie, research has shown that organizations that invest at least 3% of income on learning have better stock performance and employee retention.

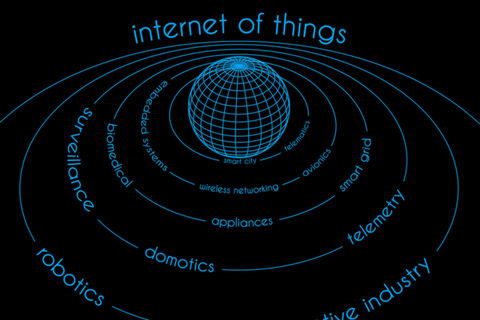

IoT technologies are beginning to drive new competitive advantage by helping consumers manage their lives (Amazon Echo), save money (Ôasys water usage monitoring), and secure their homes (August Smart Lock). The IoT also has the potential to save lives. In healthcare, this means streaming data from patient monitoring devices to keep caregivers informed of critical indicators or preventing equipment failures in the ER. In manufacturing, the IoT helps drive down the cost of production through real-time alerts on the shop floor that indicate machine issues and automatically correct problems. That means lower costs for consumers.

Where are the most exciting and viable opportunities right now for companies looking into IoT strategies to drive their business?

Kavis: We have a lot less control over what is coming into companies from all these devices, which is creating many more openings for hackers to get inside an organization. There will be specialized security platforms and services to address this, and hardware companies are putting security on sensors in the field. The IoT offers great opportunities for security experts wanting to specialize in this area.

Real-time analysis, together with emerging digital technologies and intelligent digital processes, has upended the workplace as we know it, and businesses are today subject to a deep shift in work organisation, culture and management mindset. The result is a shift towards workers acting on available information rather than resorting to ‘explorative surgery’ once the damage is already done.

The digital technologies that are transforming the world economy have converted once-solid industry boundaries into permeable membranes through which new players may enter or exit. But for established firms, the smartest move right now may be to reinvent their existing markets rather than pursue ventures in unfamiliar business segments. We used S&P Global Market Intelligence’s Compustat database to examine the diversification behavior of 1,932 companies in 10 industries between 2007 (the year the Android operating system and the iPhone debuted) and 2015. Only a handful of firms reported entering a new market segment or exiting an existing one. Analyzing the overall returns showed companies entering new business segments (as defined by the North American Industry Classification System) increased their revenue by an average US$437 million.

Digital technologies are now fundamental to creating new business opportunities, observes Pontus Siren, a partner at innovation consulting firm Innosight. Companies are finding profitable ideas close to home by using new technologies to transform processes or capitalizing on data they capture from their existing businesses. For example, Disney’s MagicBand, the chip-enabled bracelet that patrons use to buy passes, food, and souvenirs, “is a great example [of] where they are not fundamentally changing the business, but they are transforming the experience,” Siren says.

“The traditional idea of putting up barriers to entry and creating a unique, sustainable competitive advantage is not a winning approach anymore,” says Rogers. He argues that tying value generation to meeting evolving customer needs means that executives must be open to making investments that serve this value.